Plus: the importance of using proper search terms

(Please note: this post is not about this kind of beatsyncing, but might be a starting point for apps that need more sophisticated control over audio timing.)

Rhythm is a big component of Double Dynamo’s gameplay. I spent the last couple of weeks integrating the brand new audio. It was tricky to get everything to work right, and mostly not for the reasons I was expecting. I'll quickly go over how I needed the audio to behave, the problems I ran into, how I worked around the problems, and what issues still remain.

Taking a cue from Super Hexagon, DD's tracks are, well, dynamic: each track leads into the next seamlessly. At minimum the gameplay and the music need to be synchronized impeccably (see classic rhythm games like DDR). Also, audio decoding can have performance implications:

Hardware-assisted decoding provides excellent performance—but does not support simultaneous playback of multiple sounds. If you need to maximize video frame rate in your application, minimize the CPU impact of your audio playback by using uncompressed audio or the IMA4 format, or use hardware-assisted decoding of your compressed audio assets.

Cocos2d’s standard audio engine, while adequate for basic background audio and sound effects, cannot support these requirements. Not only is it impossible to play tracks back to back without a noticeable gap, but there is no way to access timing data on a playing audio stream, which makes any kind of synchronization impossible.

Gapless Playback

Stuck. After my first day of working on the problem I started to seriously consider a license to FMod or BASS as suggested in some forum threads. And then I ran across this post on the Starling/Sparrow blog. If only I had known to search for "gapless" rather than "seamless"!

I'm using IMA4 compression rather than MP3, which bypasses the inherent problems with that codec (the linked solution reminds me of techniques for generating seamless textures, for which by the way "seamless" is a perfectly fine search keyword). The link to the library that uses Apple's Audio Queue Services was exactly what I was looking for. It also turns out that Apple has some pretty straightforward sample code that does roughly the same thing.

The short version: when you create a new output audio queue, you allocate a set of buffers (typically three) and define a callback that fills those buffers, one at a time, with audio data from whatever sources you please (in my case, from a list of IMA4-compressed audio files, played in order). The queue plays the audio data from the buffers and hands each spent buffer back to the callback to be refilled. The only restriction is that the data format needs to be consistent across all sources.
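To make the buffer rotation concrete, here is a small platform-independent simulation. It does not use Audio Queue Services at all: `Track`, `PlaylistSource` and `playAll` are hypothetical names invented for this sketch, and the "hardware" is just a loop that copies samples to an output vector. What it does show is the pattern described above: a few buffers are primed, played in order, and refilled as they come back, with the fill step moving on to the next track when the current one runs out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <deque>
#include <utility>
#include <vector>

// Hypothetical stand-in for one audio file: just a run of samples.
using Track = std::vector<int>;

// Hypothetical source that hands out audio data one buffer at a time and
// advances to the next track when the current one is exhausted.
class PlaylistSource {
public:
    explicit PlaylistSource(std::vector<Track> tracks) : m_tracks(std::move(tracks)) {}

    // Fills `buffer` with up to `capacity` samples from the current track.
    // Returns the number of samples written; 0 means the playlist is done.
    std::size_t fill(std::vector<int>& buffer, std::size_t capacity) {
        buffer.clear();
        while (m_track < m_tracks.size()) {
            const Track& t = m_tracks[m_track];
            const std::size_t n = std::min(capacity, t.size() - m_offset);
            if (n > 0) {
                buffer.assign(t.data() + m_offset, t.data() + m_offset + n);
                m_offset += n;
                return n;
            }
            ++m_track;      // nothing left in this track: open the next one
            m_offset = 0;
        }
        return 0;
    }

private:
    std::vector<Track> m_tracks;
    std::size_t m_track = 0;   // index of the track being read
    std::size_t m_offset = 0;  // read position inside that track
};

// Plays the whole playlist through `bufferCount` recycled buffers and
// returns everything that was "played", in order.
std::vector<int> playAll(PlaylistSource& source, std::size_t bufferCount, std::size_t capacity) {
    assert(bufferCount > 0 && capacity > 0);
    std::vector<std::vector<int>> buffers(bufferCount);
    std::deque<std::size_t> queued;  // indices of filled buffers, in play order

    // Prime every buffer once before playback starts.
    for (std::size_t i = 0; i < bufferCount; ++i) {
        if (source.fill(buffers[i], capacity) > 0) queued.push_back(i);
    }

    std::vector<int> output;
    while (!queued.empty()) {
        const std::size_t i = queued.front();
        queued.pop_front();
        output.insert(output.end(), buffers[i].begin(), buffers[i].end());  // "play" it
        if (source.fill(buffers[i], capacity) > 0) queued.push_back(i);     // refill and requeue
    }
    return output;
}
```

Feeding it three tracks of different lengths produces one continuous stream with nothing dropped or repeated at the track boundaries, which is the property that matters for gapless playback.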

There are no gaps in playback! And provided you only play one queue at a time, Audio Queue Services will decode your audio in hardware. Success!

Below is roughly what I'm using for my callback, which is where the interesting stuff happens. The main difference between my code and the examples given is that I'm using an AQPlayer object rather than a plain struct.

    void AQPlayer::handleOutputBuffer(void *aqData, AudioQueueRef inAQ, AudioQueueBufferRef inBuffer)
    {
        AQPlayer *player = (AQPlayer *)aqData;
        UInt32 numBytesReadFromFile = 0;
        UInt32 numPackets = player->m_numPacketsToRead;

        // Read the next run of packets from the current file into the buffer.
        // On return, numPackets holds the number of packets actually read.
        AQPlayer::checkStatus(
            AudioFileReadPackets(
                player->m_audioFile,
                false,
                &numBytesReadFromFile,
                player->m_packetDesc,
                player->m_currentPacket,
                &numPackets,
                inBuffer->mAudioData
            ),
            "failed to read packets!"
        );

        if (numPackets > 0)
        {
            // Hand the filled buffer back to the queue for playback.
            inBuffer->mAudioDataByteSize = numBytesReadFromFile;
            bool enqueued = AQPlayer::checkStatus(
                AudioQueueEnqueueBuffer(
                    inAQ,
                    inBuffer,
                    (player->m_packetDesc ? numPackets : 0),
                    player->m_packetDesc
                ),
                "failed to enqueue audio buffer"
            );
            if (enqueued)
            {
                player->m_currentPacket += numPackets;
            }
        }
        else
        {
            // End of the current file: move on to the next track in the playlist.
            string audioFileName = player->m_playlist->advanceToNextTrack();
            if (audioFileName != "")
            {
                player->openAudioFile(audioFileName);
                player->copyMagicCookie();
                // Fill this same buffer from the newly opened file.
                AQPlayer::handleOutputBuffer(aqData, inAQ, inBuffer);
            }
            else
            {
                player->stop();
            }
        }
    }

Caveats

See that line that reads player->m_playlist->advanceToNextTrack()? Keep in mind that the callback is not called from the same thread as the one where you're setting up the playlist, so standard rules for thread safety apply.
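One simple way to make that call safe is to guard the playlist with a mutex. The sketch below is an assumption about what such a class could look like, not the actual Double Dynamo playlist: only `advanceToNextTrack()` and its empty-string "nothing left" convention come from the callback above, while `setTracks()` and `append()` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <mutex>
#include <string>
#include <utility>
#include <vector>

// Hypothetical playlist shared between the game thread (which edits it)
// and the audio callback thread (which advances it).
class Playlist {
public:
    // Game thread: replace the upcoming tracks and start from the first one.
    void setTracks(std::vector<std::string> tracks) {
        std::lock_guard<std::mutex> lock(m_mutex);
        m_tracks = std::move(tracks);
        m_next = 0;
    }

    // Game thread: add one more track to the end of the list.
    void append(std::string track) {
        assert(!track.empty());  // "" is reserved to mean "no more tracks"
        std::lock_guard<std::mutex> lock(m_mutex);
        m_tracks.push_back(std::move(track));
    }

    // Audio thread: returns the next file name, or "" when nothing is left.
    std::string advanceToNextTrack() {
        std::lock_guard<std::mutex> lock(m_mutex);
        if (m_next >= m_tracks.size()) return "";
        return m_tracks[m_next++];
    }

private:
    std::mutex m_mutex;
    std::vector<std::string> m_tracks;
    std::size_t m_next = 0;
};
```

Blocking inside an audio callback is risky if the other thread ever holds the lock for long, so the critical sections here do nothing but touch the list.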

Also, errors are common and ultimately unavoidable, so take time to think through a clear strategy for error handling.
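As a starting point, a helper like the `checkStatus` used in the callback can at least make failures readable. The body below is a guess at how such a helper might work, not the real one; `Status` is a stand-in for `OSStatus` so the sketch compiles anywhere, and `describeStatus` is a hypothetical name. It relies on the fact that many Core Audio error codes are four printable characters packed into a 32-bit integer.

```cpp
#include <cassert>
#include <cctype>
#include <cstdint>
#include <cstdio>
#include <string>

// Stand-in for OSStatus: a signed 32-bit integer where 0 means success.
using Status = std::int32_t;

// Renders four-character codes as text and everything else as a number.
std::string describeStatus(Status status) {
    const std::uint32_t bits = static_cast<std::uint32_t>(status);
    const char code[5] = {
        static_cast<char>((bits >> 24) & 0xFF),
        static_cast<char>((bits >> 16) & 0xFF),
        static_cast<char>((bits >> 8) & 0xFF),
        static_cast<char>(bits & 0xFF),
        '\0'
    };
    for (int i = 0; i < 4; ++i) {
        if (!std::isprint(static_cast<unsigned char>(code[i]))) {
            return std::to_string(status);  // not a four-character code
        }
    }
    return "'" + std::string(code) + "'";
}

// Returns true on success. On failure, logs the message and the decoded
// status, then returns false so the caller can skip, retry, or stop.
bool checkStatus(Status status, const char* message) {
    assert(message != nullptr);
    if (status == 0) return true;
    std::fprintf(stderr, "%s (%s)\n", message, describeStatus(status).c_str());
    return false;
}
```

Returning a bool instead of throwing keeps the decision about what to do next with the caller, which matters inside a callback that has to keep the queue fed.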

(I still have some work to do in both these areas.)

Sync or Swim

The part that I thought would be difficult, synchronizing the gameplay to the beat, turned out to be comparatively easy once I was able to play a gapless audio stream and query its play duration via AudioQueueGetCurrentTime().

I decided up front to limit the number of possible tempos so that I wouldn't have to change playback speeds or have too many large media files, and the music files are set up so that the beat is perfectly aligned to the start and end of the track. As a result, as long as you never change tempo, the total play time is the only thing you need to know in order to locate the beat, even with looping and track switching.
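The arithmetic is short enough to sketch. The names here (`BeatPosition`, `locateBeat`, `secondsFromSampleTime`) are made up for illustration, and the sketch assumes a single fixed tempo with the first beat landing exactly at time zero. The time stamp that `AudioQueueGetCurrentTime()` fills in counts sample frames, so it needs dividing by the sample rate first.

```cpp
#include <cassert>
#include <cmath>

struct BeatPosition {
    long beatIndex;          // whole beats elapsed since playback began
    double secondsIntoBeat;  // time elapsed since that beat
    double secondsToNext;    // time remaining until the next beat
};

// Converts a sample-frame count into seconds of play time.
double secondsFromSampleTime(double sampleTime, double sampleRate) {
    assert(sampleRate > 0.0);
    return sampleTime / sampleRate;
}

// Locates the beat from nothing but total play time and a fixed tempo.
BeatPosition locateBeat(double playSeconds, double beatsPerMinute) {
    assert(playSeconds >= 0.0 && beatsPerMinute > 0.0);
    const double beatLength = 60.0 / beatsPerMinute;
    const double beats = std::floor(playSeconds / beatLength);
    const double into = playSeconds - beats * beatLength;
    return { static_cast<long>(beats), into, beatLength - into };
}
```

At 120 BPM a beat lasts half a second, so 1.25 seconds of play time is a quarter second past beat 2, with a quarter second to go until beat 3. Because only total play time goes in, loops and track changes need no special handling as long as every track starts and ends on the beat.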

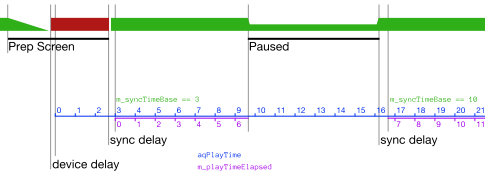

It was a little tricky to get pause/resume behavior just right. I used this diagram to stay on track:

The thickness of the green (and red) bars at the top represents the volume gain. In the Prep Screen, the previous track fades out, and then we play a very short rhythm track on loop until the user starts the level. Once gameplay starts, we need to wait for the next beat of the music before generating synchronized game events. Actual time in game (purple) is kept separate from the audio play time (blue), but is synchronized at game start and at unpause.
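One possible reading of that last point, sketched as code: game time advances from frame deltas and stops while paused, and an offset to the audio play time is recomputed at game start and at every unpause. `GameClock` and its methods are hypothetical, and whether the real game stores an offset or does something else entirely is an assumption.

```cpp
#include <cassert>

// Hypothetical clock that keeps game time separate from audio play time
// but re-anchors the two whenever the game starts or resumes.
class GameClock {
public:
    // Call at game start and at unpause with the current audio play time.
    void syncToAudio(double audioSeconds) {
        assert(audioSeconds >= 0.0);
        m_offset = audioSeconds - m_gameSeconds;
        m_paused = false;
    }

    void pause() { m_paused = true; }

    // Call once per frame; game time does not advance while paused.
    void tick(double deltaSeconds) {
        assert(deltaSeconds >= 0.0);
        if (!m_paused) m_gameSeconds += deltaSeconds;
    }

    // Time the player has actually spent in the level.
    double gameSeconds() const { return m_gameSeconds; }

    // Estimated audio play time, derived from game time and the last sync.
    double audioSeconds() const { return m_gameSeconds + m_offset; }

private:
    double m_gameSeconds = 0.0;
    double m_offset = 0.0;
    bool m_paused = true;  // nothing advances until the first sync
};
```

The estimated audio time is what you would use to find the next beat before generating synchronized game events, while the game time stays free of any jumps caused by pausing.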

Remaining Issues

But, you say, you're using an Apple-only library! How are you going to make this work on Android? Good question! One I don't yet have an answer to. I will say that I have made an effort to limit my dependencies to a pretty minimal API, so that, while audio handling is not currently as modular as it could be, it won't be too much work to fix it later on.

Couldn't I have gotten it to work with OpenAL? Probably. Honestly, I found a lead that seemed promising and ran with it. I wish I had the time and energy to properly research all of my technical choices, but I am a mere mortal. If I am fortunate enough to be able to work on an Android port of Double Dynamo, I will undoubtedly be learning more about audio programming.

What about thread safety? Oh, yeah, well, it seems pretty stable for now, but there are some bugs. I'm going to work on those.

Photo credits:

- two turntables... by web4camguy on flickr (CC BY-SA 2.0)
